Transform your Raspberry Pi into a voice recognition system built on machine learning models that run entirely offline. Advances in neural networks have made it possible to build an AI assistant on a Raspberry Pi that processes voice commands locally, which keeps audio private and avoids network round trips. Compact speech models now reach accuracy that is usable for command recognition and short dictation while running on modest hardware, making the Pi a practical platform for voice-enabled projects.

The combination of TensorFlow Lite’s model optimizations and the faster processors in recent Pi models makes it possible to run real-time voice recognition without relying on cloud services. Whether you’re building a home automation controller, creating an accessible interface for visually impaired users, or developing an educational tool, machine learning voice recognition on the Pi offers a good balance of performance, affordability, and customization.

Understanding Voice Recognition Models

Popular Voice Recognition Frameworks

Several popular voice recognition frameworks are well-suited for Raspberry Pi projects, each with its own strengths. TensorFlow Lite is a lightweight inference runtime optimized for embedded devices. It does not recognize speech by itself, but it runs compact speech models (such as keyword-spotting networks) efficiently while keeping a small memory footprint on resource-constrained hardware.

Mozilla DeepSpeech is an open-source, offline speech-to-text engine that runs on the Pi and has been popular with privacy-conscious developers who prefer to process voice data locally rather than relying on cloud services. Note that Mozilla has discontinued active development of DeepSpeech, so check that it still installs on your OS and Python version before building on it.

CMU Sphinx, though older, remains a reliable choice for Raspberry Pi implementations. It offers a comprehensive speech recognition toolkit that is relatively easy to set up, which makes it beginner-friendly. Its lightweight PocketSphinx engine ships with pre-trained acoustic models and can be customized with your own dictionary and language model for specific use cases.

For those seeking cloud-connected solutions, both Google’s Speech-to-Text API and Amazon’s Alexa Voice Service can be integrated with a Raspberry Pi. They require internet connectivity and send your audio to a third party, but they generally offer higher accuracy and regularly updated recognition models.

Model Architecture and Requirements

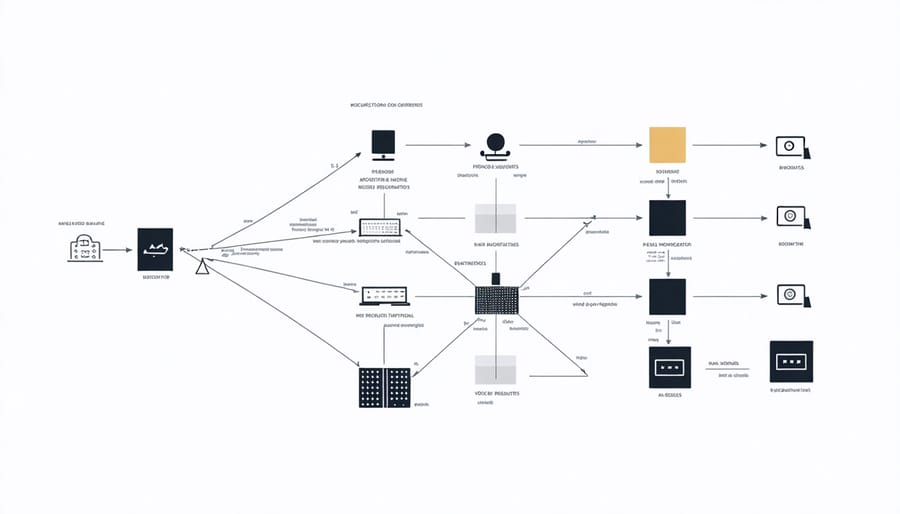

Voice recognition systems typically use deep neural networks, such as recurrent neural networks (RNNs) or convolutional neural networks (CNNs), as their acoustic model. Classic hybrid systems combine these networks with hidden Markov models (HMMs) to model how speech sounds unfold over time, while newer end-to-end models such as DeepSpeech replace the HMM with a connectionist temporal classification (CTC) output layer.

For implementing voice recognition on a Raspberry Pi, you’ll need at minimum:

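– Raspberry Pi 3 or newer (the Pi 3’s 1GB of RAM is enough only for small keyword-spotting models)

– 2GB RAM or more for full speech-to-text models (4GB recommended), which means a Raspberry Pi 4 or 5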

– Class 10 microSD card (16GB minimum)

– USB microphone or compatible audio HAT

– Stable power supply (2.5A for a Pi 3, 5V/3A USB-C for a Pi 4)

The software stack usually includes:

– Python 3.7 or higher

– TensorFlow Lite or PyTorch

– Speech recognition libraries (like CMU Sphinx or DeepSpeech)

– Audio processing libraries (PyAudio, librosa)

The model architecture typically follows three main stages:

1. Feature extraction: Converting audio into spectrograms or MFCC features

2. Neural network processing: Pattern recognition in the extracted features

3. Output generation: Converting recognized patterns into text

For optimal performance on resource-constrained devices like the Raspberry Pi, consider using quantized models or edge-optimized frameworks. This helps balance accuracy with processing speed while working within hardware limitations.
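To make "quantized models" concrete, here is a minimal sketch of 8-bit affine quantization, the scheme TensorFlow Lite documents for its integer models (real value ≈ scale × (q − zero_point)). The function names and example weights are illustrative assumptions; in a real project the TensorFlow Lite converter computes these parameters for you.

```python
from typing import List, Tuple


def quantize_params(min_val: float, max_val: float) -> Tuple[float, int]:
    """Choose scale and zero point so [min_val, max_val] maps onto int8 [-128, 127]."""
    # The representable range must include 0.0 so that zero is stored exactly.
    min_val = min(min_val, 0.0)
    max_val = max(max_val, 0.0)
    scale = (max_val - min_val) / 255.0 or 1.0  # fall back to 1.0 for an all-zero tensor
    zero_point = int(round(-128 - min_val / scale))
    return scale, zero_point


def quantize(values: List[float], scale: float, zero_point: int) -> List[int]:
    # Round to the nearest quantization step, then clamp into the int8 range.
    return [max(-128, min(127, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    return [scale * (x - zero_point) for x in q]


weights = [-0.52, -0.1, 0.0, 0.33, 0.9]  # illustrative float weights
scale, zp = quantize_params(min(weights), max(weights))
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
print(q, zp)
```

Each weight now occupies one byte instead of four, at the cost of a rounding error of at most half a step (scale / 2) for values that are not clamped.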

Setting Up Your Voice Recognition System

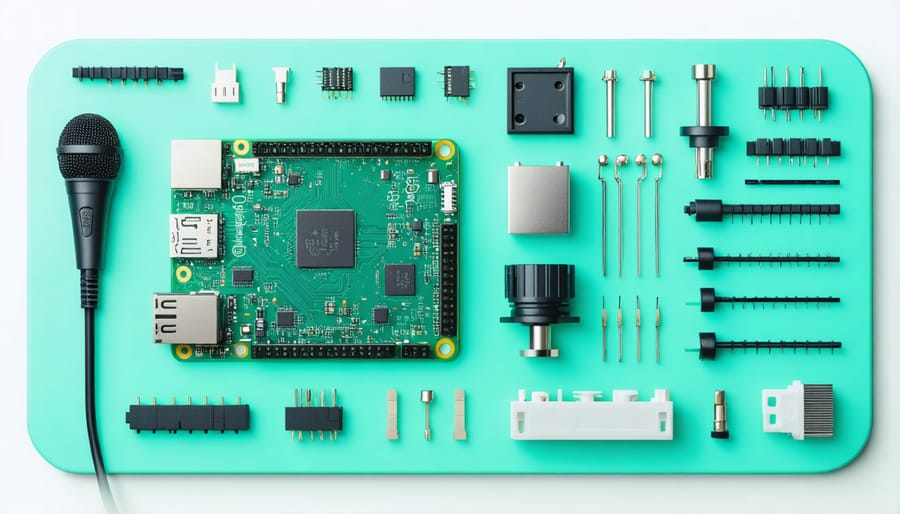

Hardware Requirements

To run machine learning voice recognition effectively, you’ll need hardware that can keep up with real-time audio analysis. At the core of your setup, we recommend a Raspberry Pi 4 with at least 4GB of RAM (check the official Raspberry Pi 4 specifications if you need to confirm which model you have). You’ll also need a quality USB microphone or a compatible microphone HAT for clear audio input.

Essential components include:

– Raspberry Pi 4 (4GB or 8GB RAM model)

– Class 10 microSD card (32GB recommended; 16GB is the bare minimum)

– 5V/3A power supply

– USB microphone or ReSpeaker 2-Mics Pi HAT

– Optional: Small cooling fan or heatsinks

For best results, place your microphone in a relatively quiet room with minimal echo, within 1-2 feet (30-60 cm) of the person speaking. Basic voice recognition tasks run fine on the standard hardware, but a cooling solution is recommended for extended use, because sustained machine learning inference can heat the CPU enough to make it throttle.

Software Installation

Before diving into voice recognition implementation, we’ll need to set up our development environment with the necessary software components. First, ensure your Raspberry Pi is running the latest version of Raspberry Pi OS, as this provides the most stable foundation for our voice recognition project.

Open your terminal and update your system:

```
sudo apt-get update
sudo apt-get upgrade
```

Next, install Python 3 and pip if they aren’t already on your system:

```
sudo apt-get install python3 python3-pip
```

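For voice recognition capabilities, we’ll need several key libraries. PyAudio is compiled against the PortAudio and Python development headers, so install those system-level dependencies first:

```
sudo apt-get install portaudio19-dev python3-dev
```

Recent Raspberry Pi OS releases (Bookworm and later) block system-wide pip installs, so create a virtual environment and install the Python libraries into it. Note that the standalone TensorFlow Lite interpreter is published on PyPI as tflite-runtime, not tensorflow-lite:

```
python3 -m venv ~/voice-env
source ~/voice-env/bin/activate
pip install SpeechRecognition pyaudio numpy tflite-runtime
```

If pip finds no tflite-runtime wheel for your Python version, check the TensorFlow Lite (LiteRT) installation guide for the current package name.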

A USB microphone should work plug-and-play. To confirm that the system detects it, list the available capture devices:

```
arecord -l
```

Make a note of your microphone’s card and device numbers, as we’ll need them later for configuration. To check the audio itself, record a five-second test clip with arecord -D plughw:1,0 -f S16_LE -r 16000 -c 1 -d 5 test.wav (replacing 1,0 with your card and device numbers) and play it back with aplay test.wav. Reboot your Raspberry Pi after installing all components to make sure everything is properly initialized.
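If you want your program to find the microphone automatically instead of hard-coding the card and device numbers, you can parse the `arecord -l` output. This is a sketch that assumes the usual `card N: ... [...], device M: ...` line format; the sample text below is illustrative, and the `CaptureDevice` type is a hypothetical helper, not part of any library.

```python
import re
import subprocess
from typing import List, NamedTuple


class CaptureDevice(NamedTuple):
    card: int
    device: int
    name: str

    @property
    def alsa_id(self) -> str:
        # "plughw" lets ALSA convert sample rate and format if the hardware needs it.
        return f"plughw:{self.card},{self.device}"


# Matches lines such as "card 1: Device [USB PnP Sound Device], device 0: ..."
_LINE = re.compile(r"^card (\d+): \S+ \[(.*?)\], device (\d+):")


def parse_arecord_list(output: str) -> List[CaptureDevice]:
    devices = []
    for line in output.splitlines():
        match = _LINE.match(line)
        if match:
            devices.append(CaptureDevice(int(match.group(1)), int(match.group(3)), match.group(2)))
    return devices


def list_capture_devices() -> List[CaptureDevice]:
    """Run `arecord -l` on the Pi and parse its output."""
    result = subprocess.run(["arecord", "-l"], capture_output=True, text=True, check=True)
    return parse_arecord_list(result.stdout)


SAMPLE = """**** List of CAPTURE Hardware Devices ****
card 1: Device [USB PnP Sound Device], device 0: USB Audio [USB Audio]
  Subdevices: 1/1
  Subdevice #0: subdevice #0
"""
print([d.alsa_id for d in parse_arecord_list(SAMPLE)])
```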

Model Training and Configuration

The training process for a machine learning voice recognition model begins with data preparation. You’ll need a substantial dataset of voice samples, ideally covering diverse accents, speech patterns, and background noise conditions so the model recognizes speech robustly. As a rough guideline, plan for at least 1,000 labeled audio samples for basic functionality; more commands and more speakers call for more data.

Configuration starts with selecting appropriate hyperparameters. For Raspberry Pi deployments, you’ll want to balance model complexity against available computing resources. Common starting points are a learning rate between 0.001 and 0.01, a batch size of 32-128 samples, and 50-100 training epochs for basic models.

Feature extraction is crucial for voice recognition. A widely used approach converts audio into Mel-frequency cepstral coefficients (MFCCs), which summarize the spectral shape of each short audio frame on a frequency scale that approximates human pitch perception. Configure your MFCC settings to extract 13-40 coefficients per frame, depending on your accuracy requirements and processing constraints.
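The standard MFCC recipe is: split the audio into overlapping frames, apply a window, take the power spectrum, pool it with triangular mel filters, take the log, and decorrelate with a discrete cosine transform. The NumPy sketch below follows that recipe; the 25 ms frame, 10 ms hop, 512-point FFT, and 40 mel bands are common choices assumed here, not requirements. In practice `librosa.feature.mfcc` does this for you, and its output will differ numerically because of different scaling conventions.

```python
import numpy as np


def hz_to_mel(hz):
    return 2595.0 * np.log10(1.0 + hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filterbank(n_mels, n_fft, sample_rate):
    """Triangular filters spaced evenly on the mel scale, shape (n_mels, n_fft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fbank = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):  # rising edge of the triangle
            fbank[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):  # falling edge of the triangle
            fbank[m - 1, k] = (right - k) / max(right - center, 1)
    return fbank


def dct_matrix(n_out, n_in):
    """Orthonormal DCT-II matrix, shape (n_out, n_in)."""
    n = np.arange(n_in)
    k = np.arange(n_out)[:, None]
    mat = np.cos(np.pi / n_in * (n + 0.5) * k) * np.sqrt(2.0 / n_in)
    mat[0] /= np.sqrt(2.0)
    return mat


def mfcc(signal, sample_rate=16000, n_mfcc=13, n_mels=40, frame_ms=25, hop_ms=10, n_fft=512):
    signal = np.asarray(signal, dtype=np.float64)
    frame_len = int(sample_rate * frame_ms / 1000)  # 400 samples at 16 kHz
    hop = int(sample_rate * hop_ms / 1000)  # 160 samples at 16 kHz
    if len(signal) < frame_len:
        signal = np.pad(signal, (0, frame_len - len(signal)))
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Index matrix: row i holds the sample positions of frame i.
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(frame_len)
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    mel_energy = power @ mel_filterbank(n_mels, n_fft, sample_rate).T
    log_mel = np.log(mel_energy + 1e-10)  # small offset avoids log(0)
    return log_mel @ dct_matrix(n_mfcc, n_mels).T  # shape (n_frames, n_mfcc)


tone = np.sin(2 * np.pi * 440 * np.arange(16000) / 16000)  # one second of a 440 Hz tone
print(mfcc(tone).shape)
```

Raising `n_mfcc` toward 40 keeps more spectral detail at the cost of larger model inputs.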

During training, hold out part of your data for validation (typically 80% training, 20% validation) to monitor model performance and detect overfitting. Techniques like dropout (rates of 0.2-0.5) and early stopping improve generalization. For Raspberry Pi deployments, quantization can reduce model size while maintaining acceptable accuracy.
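Early stopping simply watches the validation loss and halts training once it stops improving for a set number of epochs (the "patience"). The framework-independent sketch below shows the logic; the class name and the loss values in the example are illustrative. If you train with Keras, `tf.keras.callbacks.EarlyStopping` provides the same behavior.

```python
import math
from typing import Optional


class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta  # minimum decrease that counts as an improvement
        self.best_loss = math.inf
        self.best_epoch: Optional[int] = None
        self.epochs_without_improvement = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch  # a good moment to save a checkpoint
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


stopper = EarlyStopping(patience=3)
val_losses = [0.9, 0.7, 0.6, 0.61, 0.62, 0.6, 0.65]  # illustrative numbers
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        print(f"stopping at epoch {epoch}; best epoch was {stopper.best_epoch}")
        break
```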

Monitor key metrics during training, including accuracy, precision, and recall. As a rough target, aim for at least 85% validation accuracy on basic command recognition tasks. If performance falls short, adjust your hyperparameters or collect more training data.
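For a command recognizer, per-command precision, recall, and false-positive rate are more informative than a single accuracy number: a command that fires when nobody said it (a false positive) is usually worse than one that occasionally needs repeating. A minimal pure-Python sketch follows; the labels are illustrative, and scikit-learn’s `classification_report` is a ready-made alternative.

```python
from typing import Dict, Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_metrics(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, Dict[str, float]]:
    labels = sorted(set(y_true) | set(y_pred))
    total = len(y_true)
    results = {}
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        tn = total - tp - fp - fn
        results[label] = {
            "precision": tp / (tp + fp) if tp + fp else 0.0,  # how often a prediction is right
            "recall": tp / (tp + fn) if tp + fn else 0.0,  # how many true cases are caught
            "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        }
    return results


y_true = ["on", "on", "off", "off", "stop", "stop"]
y_pred = ["on", "off", "off", "off", "stop", "on"]
print(accuracy(y_true, y_pred))
print(per_class_metrics(y_true, y_pred))
```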

Remember to save checkpoints during training to preserve progress and enable model fine-tuning later. Export your final model in a format compatible with your deployment environment, such as TensorFlow Lite for optimal performance on Raspberry Pi.

Optimizing Performance

Processing Optimization

When working with voice recognition on a Raspberry Pi, optimizing processing performance is crucial for real-time applications. The Pi’s limited CPU power and memory require careful trade-offs to achieve smooth operation.

Start by reducing audio sample rates to 16kHz, which is sufficient for voice recognition while significantly decreasing processing load. Implement a voice activity detection (VAD) system to process audio only when speech is detected, conserving resources during silence.
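A voice activity detector can be as simple as an energy threshold. The sketch below assumes 16 kHz, 16-bit mono PCM split into 30 ms frames (as delivered by a PyAudio stream, for example); the threshold of 500 and the five-frame "hangover" are illustrative values you would calibrate for your microphone and room. For noisier environments, the `webrtcvad` package offers a more robust detector.

```python
import array
import math
from typing import List

SAMPLE_RATE = 16000
FRAME_MS = 30
FRAME_SAMPLES = SAMPLE_RATE * FRAME_MS // 1000  # 480 samples per frame


def frame_rms(pcm: bytes) -> float:
    """Root-mean-square level of one frame of 16-bit signed PCM.

    array uses native byte order, which is little-endian on the Pi (matching S16_LE).
    """
    samples = array.array("h", pcm)
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def detect_speech(frames: List[bytes], threshold: float = 500.0, hangover: int = 5) -> List[bool]:
    """Flag frames whose RMS exceeds `threshold`, keeping the flag on for `hangover`
    further frames so short pauses between words are not cut off."""
    flags = []
    remaining = 0
    for frame in frames:
        if frame_rms(frame) > threshold:
            remaining = hangover
            flags.append(True)
        elif remaining > 0:
            remaining -= 1
            flags.append(True)
        else:
            flags.append(False)
    return flags
```

Only frames flagged as speech need to be passed on to feature extraction and the model, so the recognizer sits idle during silence.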

Consider using lighter machine learning models designed specifically for edge devices. TensorFlow Lite models offer good performance with reasonable accuracy, and Edge TPU models can run considerably faster if you add a Coral USB Accelerator. The Pi’s built-in VideoCore GPU is rarely a practical acceleration target for these runtimes, so on the Pi itself, make sure inference uses all CPU cores (the TensorFlow Lite interpreter accepts a num_threads argument).

Memory management is equally important. Stream audio in small chunks instead of loading entire recordings into memory, and choose buffer sizes that balance responsiveness with resource usage. Reuse buffers where you can and drop references to large arrays once you no longer need them; Python’s garbage collector can only reclaim objects that nothing references anymore.
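Streaming with a bounded buffer keeps memory use constant no matter how long the program runs. In this sketch, `collections.deque(maxlen=...)` holds a short "pre-roll" of recent silent chunks so the start of each utterance is not clipped, and `max_chunks` caps how much speech is accumulated. The function name, the chunk source, and the `is_speech` callable (for example a simple energy threshold) are assumptions for illustration.

```python
from collections import deque
from typing import Callable, Deque, Iterable, Iterator, List


def segment_stream(chunks: Iterable[bytes], is_speech: Callable[[bytes], bool],
                   pre_roll_chunks: int = 10, max_chunks: int = 300) -> Iterator[bytes]:
    """Yield one bytes object per detected utterance using bounded memory."""
    history: Deque[bytes] = deque(maxlen=pre_roll_chunks)  # oldest chunks drop off automatically
    utterance: List[bytes] = []
    for chunk in chunks:
        if is_speech(chunk):
            if not utterance:
                utterance.extend(history)  # include the audio just before speech onset
                history.clear()
            utterance.append(chunk)
            if len(utterance) >= max_chunks:  # cap memory for very long speech
                yield b"".join(utterance)
                utterance = []
        else:
            if utterance:
                yield b"".join(utterance)  # utterance ended at this silent chunk
                utterance = []
            history.append(chunk)
    if utterance:
        yield b"".join(utterance)
```

On the Pi, `chunks` could be a generator that repeatedly calls PyAudio’s `stream.read()`, so only the current utterance and the short pre-roll are ever held in memory.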

For Python implementations, use NumPy’s vectorized arrays instead of regular lists for signal processing. The PyPy interpreter can speed up pure-Python code, but it has limited compatibility with C-extension libraries such as TensorFlow Lite, so test before switching. If possible, compile performance-critical portions of your code to C with Cython for additional speed.

These optimizations can help achieve near real-time voice recognition performance, even on modest hardware like the Raspberry Pi.

Accuracy Improvements

Several key techniques have emerged to enhance voice recognition accuracy in machine learning systems. Data augmentation plays a crucial role by artificially expanding training datasets through methods like adding background noise, changing speech speed, and applying different acoustic environments. This helps create more robust models that perform better in real-world conditions.
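Two of the augmentations mentioned above are easy to sketch: mixing in background noise at a chosen signal-to-noise ratio (SNR) and changing playback speed. The version below works on plain lists of float samples; the SNR range of 5-20 dB and speed range of ±10% are illustrative assumptions, and the naive speed change also shifts pitch (dedicated libraries can change tempo without altering pitch).

```python
import math
import random
from typing import List, Sequence


def power(x: Sequence[float]) -> float:
    return sum(v * v for v in x) / len(x)


def add_noise(speech: Sequence[float], noise: Sequence[float], snr_db: float) -> List[float]:
    """Mix `noise` into `speech` at the requested SNR, tiling the noise if it is short."""
    tiled = [noise[i % len(noise)] for i in range(len(speech))]
    # Scale the noise so that 10 * log10(P_speech / P_noise) == snr_db.
    gain = math.sqrt(power(speech) / (power(tiled) * 10 ** (snr_db / 10)))
    return [s + gain * n for s, n in zip(speech, tiled)]


def change_speed(x: Sequence[float], factor: float) -> List[float]:
    """Naive speed change by linear-interpolation resampling (also shifts pitch)."""
    n_out = int(len(x) / factor)
    out = []
    for i in range(n_out):
        pos = i * factor
        j = int(pos)
        frac = pos - j
        nxt = x[j + 1] if j + 1 < len(x) else x[j]
        out.append(x[j] * (1 - frac) + nxt * frac)
    return out


def augment(speech: Sequence[float], noise: Sequence[float], rng: random.Random) -> List[float]:
    """Produce one randomized training variant of a clip."""
    snr_db = rng.uniform(5, 20)
    factor = rng.uniform(0.9, 1.1)
    return change_speed(add_noise(speech, noise, snr_db), factor)


rng = random.Random(0)
speech = [math.sin(2 * math.pi * 440 * i / 16000) for i in range(1600)]
noise = [rng.gauss(0, 1) for _ in range(400)]
variant = augment(speech, noise, rng)
```

Generating several variants per recorded clip multiplies the effective size of the dataset without any extra recording sessions.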

Pre-processing techniques, such as noise reduction and signal normalization, significantly improve recognition accuracy. By cleaning the audio input before processing, these methods help the system focus on relevant speech patterns while filtering out unwanted interference.
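The signal-normalization side of pre-processing can be sketched in a few lines: remove any DC offset, apply a pre-emphasis filter y[n] = x[n] − a·x[n−1] (with a = 0.97, a conventional choice assumed here) to boost the higher frequencies that carry consonant detail, and peak-normalize so every clip reaches the same level. Noise reduction methods such as spectral subtraction go beyond this sketch.

```python
from typing import List, Sequence


def preprocess(x: Sequence[float], pre_emphasis: float = 0.97, target_peak: float = 0.9) -> List[float]:
    if not x:
        return []
    # 1. Remove any DC offset (a constant bias some microphones introduce).
    mean = sum(x) / len(x)
    centered = [v - mean for v in x]
    # 2. Pre-emphasis: y[n] = x[n] - a * x[n-1] boosts high frequencies.
    emphasized = [centered[0]] + [
        centered[i] - pre_emphasis * centered[i - 1] for i in range(1, len(centered))
    ]
    # 3. Peak-normalize so every clip reaches the same maximum amplitude.
    peak = max(abs(v) for v in emphasized)
    if peak == 0:
        return emphasized  # silent clip: nothing to scale
    return [v * target_peak / peak for v in emphasized]
```

Applying the same normalization to training clips and to live microphone input keeps the model from seeing differently scaled audio at inference time.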

Transfer learning has proven particularly effective for Raspberry Pi implementations. By using pre-trained models as a starting point and fine-tuning them for specific use cases, developers can achieve higher accuracy without requiring extensive computational resources or training data.

Another important approach is the implementation of context-aware recognition. By incorporating language models and contextual cues, the system can better predict words based on their surrounding context, reducing common recognition errors.
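One common way a language model adds context is by rescoring the recognizer’s N-best list: each candidate transcript gets its acoustic score plus a weighted language-model score, and the best combined score wins. The sketch below trains a tiny add-one-smoothed bigram model on example commands; the training sentences, acoustic scores, and the weight of 0.5 are illustrative assumptions, since real recognizers expose N-best scores in their own formats.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


class BigramLM:
    """Tiny add-one-smoothed bigram language model trained on example commands."""

    def __init__(self, sentences: Sequence[str]):
        self.unigrams: Counter = Counter()
        self.bigrams: Counter = Counter()
        for sentence in sentences:
            words = ["<s>"] + sentence.lower().split() + ["</s>"]
            self.unigrams.update(words[:-1])  # counts of each word as a history
            self.bigrams.update(zip(words[:-1], words[1:]))
        self.vocab_size = len(set(self.unigrams) | {w for _, w in self.bigrams})

    def log_prob(self, sentence: str) -> float:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        total = 0.0
        for prev, word in zip(words[:-1], words[1:]):
            count = self.bigrams[(prev, word)]
            total += math.log((count + 1) / (self.unigrams[prev] + self.vocab_size))
        return total


def rescore(nbest: List[Tuple[str, float]], lm: BigramLM, lm_weight: float = 0.5) -> str:
    """Pick the hypothesis with the best acoustic log score + weighted LM log score."""
    return max(nbest, key=lambda h: h[1] + lm_weight * lm.log_prob(h[0]))[0]


lm = BigramLM(["turn on the lights", "turn off the lights", "turn on the fan"])
nbest = [("turn on the lice", -4.0), ("turn on the lights", -4.2)]  # (text, acoustic log score)
print(rescore(nbest, lm))
```

Here the acoustically slightly better "turn on the lice" loses to "turn on the lights", because the language model has never seen "the lice" in a command.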

Real-time adaptation techniques allow the system to learn from user corrections and adjust its recognition patterns accordingly. This continuous learning approach helps the system improve its accuracy over time, particularly for specific users or use cases.

Finally, ensemble methods combining multiple recognition models have shown promising results. By leveraging the strengths of different approaches and voting mechanisms, these systems can achieve higher accuracy than single-model implementations.
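A simple voting mechanism takes each model’s (label, confidence) prediction, picks the label with the most votes, breaks ties by total confidence, and refuses to answer when agreement is too weak. The function below is a hypothetical sketch of that idea, not a particular library’s API.

```python
from collections import Counter
from typing import Optional, Sequence, Tuple


def vote(predictions: Sequence[Tuple[str, float]], min_agreement: int = 2) -> Optional[str]:
    """Majority vote over (label, confidence) pairs from several models.

    Ties are broken by the summed confidence of each label. Returns None when no
    label gets at least `min_agreement` votes, so the caller can ask the user to repeat.
    """
    if not predictions:
        return None
    counts = Counter(label for label, _ in predictions)
    confidence: Counter = Counter()
    for label, conf in predictions:
        confidence[label] += conf
    best = max(counts, key=lambda label: (counts[label], confidence[label]))
    return best if counts[best] >= min_agreement else None


print(vote([("lights_on", 0.9), ("lights_off", 0.6), ("lights_on", 0.7)]))
```

On a Pi, every extra model adds inference time, so ensembles work best with two or three small models rather than several large ones.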

Real-World Applications

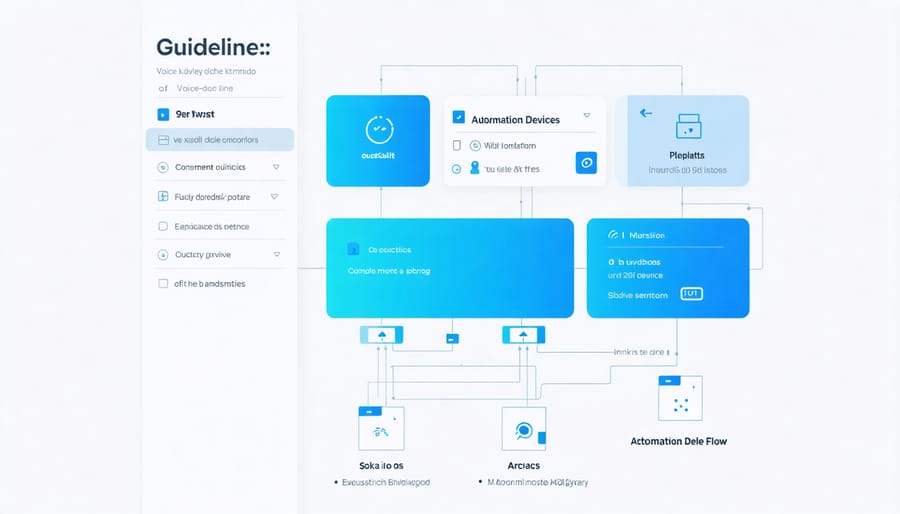

Home Automation Integration

Voice recognition has become a cornerstone of modern smart home integration, offering an intuitive way to control various household devices. By implementing machine learning voice recognition on your Raspberry Pi, you can create a customized home automation system that responds to your voice commands.

Popular voice control applications include adjusting lighting, managing thermostats, controlling entertainment systems, and operating smart appliances. The beauty of using a Raspberry Pi for this purpose lies in its flexibility: it connects over Wi-Fi out of the box and, with a USB adapter or HAT, can also talk to Zigbee and Z-Wave devices.

To set up voice-controlled home automation, you’ll need to connect your voice recognition model to the APIs and controllers for your smart devices. Popular platforms like Home Assistant or openHAB can serve as the bridge between your voice commands and device actions. They support a wide range of devices and can be configured to respond to custom voice triggers.
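As one example of that bridge, Home Assistant exposes a REST API where a POST to `/api/services/<domain>/<service>` with a long-lived access token triggers an action. The sketch below uses only the standard library; the `homeassistant.local:8123` address, the token placeholder, the entity IDs, and the command table are assumptions you would replace with your own.

```python
import json
import urllib.request

HA_URL = "http://homeassistant.local:8123"  # assumption: default Home Assistant address
HA_TOKEN = "YOUR_LONG_LIVED_ACCESS_TOKEN"  # placeholder: create one in your HA user profile


def build_service_call(domain: str, service: str, entity_id: str,
                       base_url: str = HA_URL, token: str = HA_TOKEN) -> urllib.request.Request:
    """Build a POST to Home Assistant's REST API: /api/services/<domain>/<service>."""
    body = json.dumps({"entity_id": entity_id}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/services/{domain}/{service}",
        data=body,
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical mapping from recognized command text to (domain, service, entity_id).
COMMANDS = {
    "turn on the lights": ("light", "turn_on", "light.living_room"),
    "turn off the lights": ("light", "turn_off", "light.living_room"),
}


def handle_command(text: str) -> bool:
    """Send the matching service call; return False for unrecognized commands."""
    action = COMMANDS.get(text.lower().strip())
    if action is None:
        return False
    with urllib.request.urlopen(build_service_call(*action), timeout=5) as resp:
        return resp.status == 200
```

Keeping the command table separate from the network code makes it easy to add devices without touching the recognition pipeline.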

For optimal performance, consider implementing wake word detection to prevent false activations and create specific command patterns that are easily distinguishable from regular conversation. You can also add confirmation feedback through LED indicators or speaker responses to acknowledge successful command recognition.

Remember to implement proper error handling and fallback mechanisms to ensure your system remains reliable even when voice recognition accuracy isn’t perfect. This creates a more robust and user-friendly home automation experience.
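The wake-word gating, distinctive command patterns, and fallback behavior described above can be combined in a small text-level layer that sits after the recognizer. In this sketch the wake word "jarvis", the regular-expression patterns, and the `actions` callables are hypothetical; a production system would usually detect the wake word acoustically before running full recognition, but the control flow is the same.

```python
import re
from typing import Callable, Dict, Optional, Tuple

WAKE_WORD = "jarvis"  # hypothetical; pick a word that rarely appears in conversation

# Hypothetical command patterns; named groups capture the variable parts.
PATTERNS: Dict[str, "re.Pattern[str]"] = {
    "lights": re.compile(r"^turn (?P<state>on|off) the lights$"),
    "thermostat": re.compile(r"^set (?:the )?temperature to (?P<degrees>\d{2})$"),
}


def parse_command(transcript: str) -> Optional[Tuple[str, Dict[str, str]]]:
    words = transcript.lower().strip().split()
    if not words or words[0] != WAKE_WORD:
        return None  # no wake word: ignore ordinary conversation
    command = " ".join(words[1:])
    for name, pattern in PATTERNS.items():
        match = pattern.match(command)
        if match:
            return name, match.groupdict()
    return "unknown", {"text": command}


def respond(transcript: str, actions: Dict[str, Callable[..., None]]) -> str:
    """Run the matching action and return a spoken/LED feedback message."""
    parsed = parse_command(transcript)
    if parsed is None:
        return ""
    name, args = parsed
    if name == "unknown":
        return "Sorry, I didn't catch that. Please repeat the command."
    try:
        actions[name](**args)
    except Exception as exc:  # keep the assistant running if the action fails
        return f"Command recognized, but it failed: {exc}"
    return f"OK: {name}"
```

Returning a message for every outcome gives you one place to drive the LED or speaker confirmation.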

Custom Voice Commands

Custom voice commands add a personal touch to your machine learning voice recognition system, allowing you to create specific triggers and responses tailored to your needs. The process begins with collecting sample audio data for your desired commands. Start by recording multiple variations of each command, considering different intonations and speaking speeds to improve recognition accuracy.

To implement custom commands, you’ll need to create a training dataset. Record at least 20-30 samples per command in a quiet environment, ensuring consistent audio quality. These samples should be organized in separate folders for each command, making it easier to train your model effectively.
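With one folder per command, the folder names double as labels. The sketch below assumes a layout like `dataset/lights_on/001.wav` (the directory names are hypothetical) and performs a per-command (stratified) split, so every command appears in both the training and validation sets even when you only have 20-30 recordings of each.

```python
import random
from pathlib import Path
from typing import Dict, List, Tuple

Example = Tuple[Path, str]  # (audio file, command label)


def scan_dataset(root: str) -> Dict[str, List[Path]]:
    """Map each command label (sub-folder name) to its .wav files."""
    return {
        folder.name: sorted(folder.glob("*.wav"))
        for folder in sorted(Path(root).iterdir())
        if folder.is_dir()
    }


def split_dataset(files_by_label: Dict[str, List[Path]], val_fraction: float = 0.2,
                  seed: int = 42) -> Tuple[List[Example], List[Example]]:
    """Stratified split: shuffle each command's files and hold out `val_fraction`."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train: List[Example] = []
    val: List[Example] = []
    for label, files in files_by_label.items():
        shuffled = list(files)
        rng.shuffle(shuffled)
        n_val = max(1, round(len(shuffled) * val_fraction)) if len(shuffled) > 1 else 0
        val += [(f, label) for f in shuffled[:n_val]]
        train += [(f, label) for f in shuffled[n_val:]]
    return train, val
```

Usage: `train, val = split_dataset(scan_dataset("dataset"))`.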

The next step involves preprocessing your audio data. Convert your recordings into spectrograms or mel-frequency cepstral coefficients (MFCCs), which help your model identify distinct patterns in speech. Libraries such as librosa or TensorFlow’s tf.signal module provide tools for this conversion.

When training your model, use transfer learning to build upon existing voice recognition models. This approach saves time and computational resources while maintaining high accuracy. Fine-tune the model using your custom dataset, adjusting parameters like learning rate and batch size to optimize performance.

Testing and validation are crucial. Create a separate test dataset to evaluate your model’s performance. Monitor metrics like accuracy and false positive rates, and iterate on your training process if needed. Remember to implement error handling for misrecognized commands and provide user feedback for a smoother experience.

Machine learning voice recognition has revolutionized how we interact with technology, and its implementation on Raspberry Pi platforms continues to evolve rapidly. From basic speech-to-text functions to complex natural language processing, the possibilities are expanding every day. As we’ve explored, getting started with voice recognition on Raspberry Pi is now more accessible than ever, thanks to available libraries and frameworks that simplify the development process.

Looking ahead, we can expect even more exciting developments in this field, including improved accuracy rates, better handling of different accents and languages, and reduced processing requirements. For hobbyists and developers, this means more opportunities to create sophisticated voice-controlled projects while maintaining the cost-effectiveness and flexibility of the Raspberry Pi platform.

Whether you’re building a smart home assistant or developing educational tools, the combination of machine learning and voice recognition opens up endless possibilities for creative and practical applications. Start small, experiment often, and don’t be afraid to push the boundaries of what’s possible with these powerful technologies.