Edge AI vision systems are transforming how we process and analyze visual data by bringing artificial intelligence directly to cameras and sensors, reducing or eliminating the need for cloud connectivity. By performing machine learning inference on edge devices like Raspberry Pi, these systems can deliver real-time insights while keeping data local and reducing bandwidth costs.

Transform your Raspberry Pi into a capable edge vision system by combining low-cost cameras, optimized ML frameworks like TensorFlow Lite, and purpose-built vision processors. Whether you’re building an intelligent security camera, an automated quality control system, or a smart home monitor, edge AI vision offers a practical balance of processing power and energy efficiency.

The technology has evolved significantly, and complex tasks like object detection, facial recognition, and motion analysis can now run smoothly on resource-constrained devices. With frameworks designed for edge deployment and growing support for hardware acceleration, developers can achieve performance that is good enough for many real-time tasks, without the network latency and privacy concerns of traditional cloud-based solutions.

As we explore the implementation of edge AI vision systems, we’ll focus on practical, cost-effective solutions that leverage the full potential of your Raspberry Pi while maintaining reliable, real-time performance for your specific use case.

What Makes Edge AI Vision Special on Raspberry Pi

Processing Power vs. Cloud Dependencies

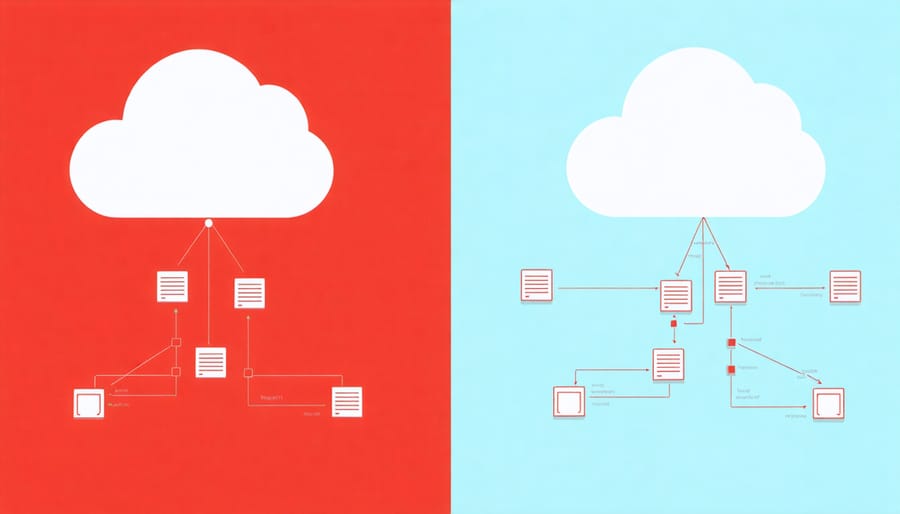

Edge AI vision systems face a crucial trade-off between local processing power and cloud dependency. While cloud solutions offer virtually unlimited computational resources, they require consistent internet connectivity and add network latency. Processing locally on the edge device eliminates these concerns but must work within the constraints of the device’s hardware.

The advantage of processing vision data locally becomes apparent in real-time applications like security systems or autonomous robots. These systems can respond instantly to visual inputs without waiting for cloud communication. Additionally, local processing ensures data privacy and reduces bandwidth costs, making it ideal for sensitive applications or remote deployments.

However, more complex AI models might strain edge devices, necessitating optimization techniques or model compression. The key is finding the right balance – using lightweight models for immediate processing on the edge while offloading more intensive tasks to the cloud when necessary. This hybrid approach often provides the best compromise between performance and practicality.
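The hybrid approach can be sketched as a confidence-gated fallback: act on the local model’s answer when it is confident, and consult a cloud service only for uncertain frames. This is a minimal sketch, assuming two hypothetical callables, `local_model` and `cloud_model`, that each return a `(label, confidence)` pair; the 0.6 threshold is an arbitrary placeholder to tune per application.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# Hypothetical model signature: takes an encoded frame, returns (label, confidence).
Model = Callable[[bytes], Tuple[str, float]]


@dataclass
class Prediction:
    label: str
    confidence: float
    source: str  # "edge" or "cloud"


def classify_hybrid(
    frame: bytes,
    local_model: Model,
    cloud_model: Optional[Model] = None,
    threshold: float = 0.6,  # placeholder value; tune for your use case
) -> Prediction:
    """Run the local model first and offload only low-confidence frames."""
    label, confidence = local_model(frame)
    if confidence >= threshold or cloud_model is None:
        # Confident enough, or no cloud configured: act on the edge result.
        return Prediction(label, confidence, "edge")
    try:
        cloud_label, cloud_confidence = cloud_model(frame)
    except OSError:
        # Network errors (ConnectionError is a subclass of OSError) fall back
        # to the local answer instead of stalling the pipeline.
        return Prediction(label, confidence, "edge")
    return Prediction(cloud_label, cloud_confidence, "cloud")
```

Because the local result is always available, the system keeps working during network outages; only its accuracy on hard cases suffers.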

Real-time Processing Capabilities

Real-time processing is a cornerstone of edge AI vision applications, enabling devices like Raspberry Pi to analyze video feeds and act on the results with low latency. Achievable frame rates vary widely with model complexity and hardware: lightweight, quantized models (especially with an accelerator) can reach roughly 15-30 frames per second, while larger models running on the CPU alone may manage only a few. This real-time performance is crucial for applications such as object detection, facial recognition, and motion tracking.
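Frame rates translate directly into a per-frame time budget: at 30 frames per second each frame gets about 33 ms for capture, preprocessing, and inference, and at 15 fps about 67 ms. A small helper makes the arithmetic explicit; the 50 ms inference and 10 ms overhead in the usage note are hypothetical numbers, not measurements.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available per frame, in milliseconds, at the target frame rate."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def max_sequential_fps(inference_ms: float, overhead_ms: float = 0.0) -> float:
    """Highest frame rate sustainable when every frame is processed in turn."""
    return 1000.0 / (inference_ms + overhead_ms)
```

For example, `max_sequential_fps(50, 10)` gives about 16.7 fps, which clears a 15 fps target but not a 30 fps one.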

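To maximize processing efficiency, edge AI vision systems employ various optimization techniques. These include model quantization, which stores weights (and often activations) as 8-bit integers instead of 32-bit floats, shrinking the model roughly fourfold and often speeding up inference while keeping accuracy acceptable, and hardware acceleration using dedicated neural processing units (NPUs) or GPU cores. The Raspberry Pi 4 has no NPU, and its VideoCore GPU is rarely used by mainstream ML frameworks, so on that board acceleration usually comes from optimized CPU kernels that use the Arm NEON SIMD instructions or from an external accelerator such as a USB neural compute stick.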
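To make quantization concrete, here is a simplified per-tensor version of the affine int8 scheme that TensorFlow Lite documents, where a real value is recovered as `scale * (q - zero_point)`. Real converters add calibration data and per-channel weight scales, so treat this as an illustration of the idea rather than what the converter does internally.

```python
from typing import List, Sequence, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit range


def quant_params(x_min: float, x_max: float) -> Tuple[float, int]:
    """Choose scale and zero point so [x_min, x_max] maps onto [QMIN, QMAX]."""
    # Widen the range to include 0.0 so zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (QMAX - QMIN) or 1.0  # avoid a zero scale
    zero_point = int(round(QMIN - x_min / scale))
    return scale, zero_point


def quantize(values: Sequence[float], scale: float, zero_point: int) -> List[int]:
    """Map floats to int8 codes, clamping anything outside the range."""
    return [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]


def dequantize(codes: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats from int8 codes."""
    return [scale * (q - zero_point) for q in codes]
```

Each weight now takes one byte instead of four, and the round-trip error for in-range values stays within about one quantization step (`scale`).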

Edge AI applications also use techniques like frame skipping and selective processing to maintain real-time performance. Instead of processing every single frame, the system might analyze every second or third frame, trading some temporal resolution for lower processor load. This approach is particularly effective for applications where slight delays are acceptable, such as smart doorbell cameras or automated inspection systems.
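Frame skipping can be expressed as a small generator that runs inference on every n-th frame and reuses the most recent result for the frames in between, so downstream code (overlays, alerts) still sees a result for every frame. `infer` here is any callable you supply; the generator does not depend on a particular framework.

```python
from typing import Callable, Iterable, Iterator, Optional, Tuple, TypeVar

Frame = TypeVar("Frame")
Result = TypeVar("Result")


def skip_frames(
    frames: Iterable[Frame],
    infer: Callable[[Frame], Result],
    every_n: int = 3,
) -> Iterator[Tuple[Frame, Optional[Result]]]:
    """Yield (frame, result) pairs, running `infer` only on every n-th frame."""
    if every_n < 1:
        raise ValueError("every_n must be at least 1")
    latest: Optional[Result] = None
    for index, frame in enumerate(frames):
        if index % every_n == 0:
            latest = infer(frame)  # fresh result on this frame
        yield frame, latest  # frames in between reuse the last result
```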

Essential Hardware for Edge AI Vision Projects

Camera Options and Specifications

When implementing edge AI vision projects, selecting the right camera is crucial for optimal performance. The official Raspberry Pi Camera Module series, including the current Camera Module 3, is natively supported by Raspberry Pi OS and offers features useful for vision work, such as autofocus and HDR. Camera Module 3 comes in both standard and wide-angle variants, providing flexibility for different use cases.

For those seeking a high-quality camera setup, the 12.3MP HQ Camera Module delivers outstanding image quality with its Sony IMX477 sensor. This option is particularly valuable for projects requiring precise object detection or detailed image analysis.

Third-party cameras compatible with the CSI interface also work well, though you’ll need to ensure proper driver support. USB cameras offer another viable alternative, with models like the Logitech C920 providing reliable performance for edge AI applications.

Key specifications to consider include:

– Resolution: 720p minimum for basic object detection

– Frame rate: 30fps or higher for real-time processing

– Field of view: 60-90 degrees for general applications

– Low-light performance: Essential for indoor or nighttime operations

– Focus capabilities: Auto-focus preferred for dynamic environments

Remember that higher specifications don’t always translate to better AI performance. Often, a balance between resolution, frame rate, and processing capabilities yields the most efficient results for edge deployment.
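One reason extra resolution often goes unused is that detection models take a small, fixed-size input; SSD MobileNet models, for example, commonly use 300×300 pixels. The sketch below computes an aspect-preserving (letterbox) resize into such an input. Letterboxing is one common choice; many pipelines simply stretch the frame instead.

```python
from typing import Tuple


def letterbox(
    frame_w: int, frame_h: int, model_w: int, model_h: int
) -> Tuple[float, Tuple[int, int], Tuple[int, int]]:
    """Return (scale, resized size, total padding) to fit a frame into the model input."""
    scale = min(model_w / frame_w, model_h / frame_h)
    new_w, new_h = round(frame_w * scale), round(frame_h * scale)
    # Leftover space is filled with padding so the aspect ratio is preserved.
    return scale, (new_w, new_h), (model_w - new_w, model_h - new_h)


def fraction_of_pixels_used(frame_w: int, frame_h: int, model_w: int, model_h: int) -> float:
    """Share of the original pixel count that survives the resize."""
    _, (new_w, new_h), _ = letterbox(frame_w, frame_h, model_w, model_h)
    return (new_w * new_h) / (frame_w * frame_h)
```

A 1280×720 frame shrinks to 300×169, keeping about 5.5% of its pixels; a full-resolution 4056×3040 frame from the HQ Camera keeps roughly 0.5%. Unless you crop regions of interest or use a model with a larger input, the extra resolution mostly costs capture and resize time.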

Processing Add-ons and Accessories

To enhance your edge AI vision system’s capabilities, several add-ons and accessories can boost performance and functionality. If you are still using an older camera, upgrading to Camera Module 3 improves image quality and adds autofocus. For projects requiring wider fields of view, its wide-angle variant (120 degrees diagonal) is particularly useful in surveillance and motion detection applications.

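Neural compute sticks, such as the Intel Neural Compute Stick 2 (now discontinued) or the Google Coral USB Accelerator, can dramatically improve inference speeds by offloading AI processing from the main processor. These devices connect via USB but need their own runtime libraries and, in the Coral’s case, models compiled specifically for the Edge TPU. For supported models they can cut processing times substantially, by up to 10x in some applications, so benchmark your own model before relying on a specific figure.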
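Speedup claims are easy to check on your own hardware. This minimal timing harness, built only on the standard library, reports median latency after a few warm-up runs; the median is used because the first inferences and occasional background activity can produce outliers. Pass it any zero-argument callable, for example a TensorFlow Lite interpreter’s `invoke` method after the input tensor has been set.

```python
import statistics
import time
from typing import Callable


def median_latency_ms(run_once: Callable[[], object], warmup: int = 5, runs: int = 50) -> float:
    """Median wall-clock time of `run_once`, in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        run_once()  # warm caches and lazy initialization; not timed
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def speedup(baseline_ms: float, accelerated_ms: float) -> float:
    """How many times faster the accelerated path is."""
    return baseline_ms / accelerated_ms
```

Time the same model on the CPU and on the accelerator (using the accelerator-compiled variant of the model), then compare the two medians with `speedup(cpu_ms, accel_ms)`.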

For projects requiring enhanced lighting control, consider adding infrared LED arrays (paired with a NoIR camera, which lacks an infrared-blocking filter) or adjustable LED panels. These are particularly valuable for consistent performance in varying light conditions or nighttime operations. A cooling solution, such as a heatsink with a small fan, is recommended when running intensive AI models to maintain optimal performance and prevent thermal throttling.
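On Raspberry Pi OS, the SoC temperature is exposed in millidegrees Celsius at `/sys/class/thermal/thermal_zone0/temp`, so a long-running inference loop can check it and ease off before the firmware throttles the CPU (on the Raspberry Pi 4 this begins at around 80 °C). The 75 °C back-off threshold below is an assumed safety margin, not an official figure.

```python
from pathlib import Path

# Standard sysfs thermal zone on Raspberry Pi OS; value is in millidegrees Celsius.
THERMAL_ZONE = Path("/sys/class/thermal/thermal_zone0/temp")
BACK_OFF_C = 75.0  # assumed margin below the firmware throttling point


def parse_millidegrees(raw: str) -> float:
    """Convert the sysfs text (for example '62300') to degrees Celsius."""
    return int(raw.strip()) / 1000.0


def read_soc_temp_c(path: Path = THERMAL_ZONE) -> float:
    """Read the current SoC temperature in degrees Celsius."""
    return parse_millidegrees(path.read_text())


def should_back_off(temp_c: float, limit_c: float = BACK_OFF_C) -> bool:
    """True when the loop should reduce its workload, e.g. by skipping more frames."""
    return temp_c >= limit_c
```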

Storage expansion through high-speed USB 3.0 SSDs or large-capacity SD cards is important for projects involving extensive data collection or model storage. Consider using powered USB hubs when connecting multiple accessories to ensure stable power delivery to all components. For portable applications, a power bank of at least 10,000mAh that can supply a stable 5V at up to 3A (the Raspberry Pi 4’s recommended supply) can provide several hours of autonomous operation, depending on load.
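The runtime estimate can be checked with simple energy arithmetic. Power banks are usually rated at the internal cell voltage (about 3.7 V), and some energy is lost converting to 5 V. The sketch assumes 85% conversion efficiency and a 5 W average draw for a Pi running inference with a camera attached; both are assumptions to replace with your own measurements.

```python
def battery_runtime_hours(
    capacity_mah: float,
    load_watts: float,
    cell_voltage: float = 3.7,  # typical Li-ion nominal voltage used in ratings
    efficiency: float = 0.85,   # assumed boost-converter efficiency
) -> float:
    """Estimated runtime from a power bank's rated capacity and an average load."""
    energy_wh = capacity_mah / 1000.0 * cell_voltage  # rated energy in watt-hours
    usable_wh = energy_wh * efficiency                 # energy actually delivered at 5 V
    return usable_wh / load_watts
```

`battery_runtime_hours(10_000, 5.0)` comes to about 6.3 hours; doubling the average load halves the runtime.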

Popular Edge AI Vision Frameworks

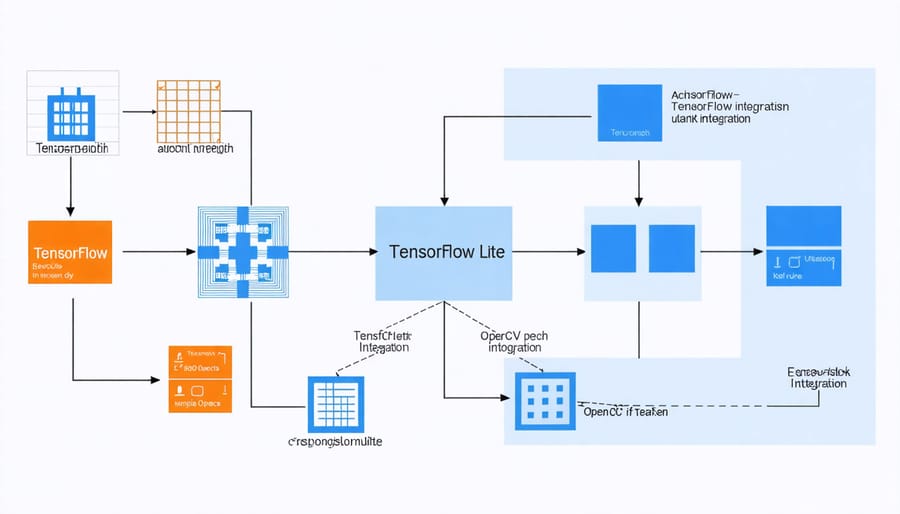

TensorFlow Lite and OpenCV

TensorFlow Lite and OpenCV form the backbone of many modern computer vision applications on edge devices. TensorFlow Lite, optimized for resource-constrained environments, enables running trained AI models efficiently on your Raspberry Pi. It supports model compression and quantization, helping maintain performance while reducing computational demands.

OpenCV complements TensorFlow Lite by providing essential image processing capabilities. Its Python bindings make it accessible for beginners while offering powerful features like image filtering, object detection, and real-time video processing. The combination of these frameworks allows you to create sophisticated vision projects without overwhelming your Pi’s resources.

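To get started, install both frameworks with pip. Recent Raspberry Pi OS releases refuse system-wide pip installs, so create a virtual environment first. Note that the TensorFlow Lite interpreter is published as the `tflite-runtime` package:

```
python3 -m venv ~/edge-ai-env
source ~/edge-ai-env/bin/activate
pip install tflite-runtime
pip install opencv-python
```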

These tools work seamlessly together – OpenCV handles image capture and preprocessing, while TensorFlow Lite runs the AI inference. This partnership is particularly effective for projects like facial recognition, object tracking, and motion detection, where real-time processing is crucial.
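A minimal sketch of that division of labor, assuming a TensorFlow Lite image-classification model (a single output tensor of class scores) and a USB webcam that OpenCV can open as device 0; CSI camera modules on current Raspberry Pi OS are usually read through the Picamera2 library instead. The hardware-dependent imports sit inside the function so the `top_k` helper can be reused and tested without a camera. Scaling float inputs to [0, 1] is an assumption; check what your model expects.

```python
from typing import List, Sequence, Tuple


def top_k(scores: Sequence[float], k: int = 3) -> List[Tuple[int, float]]:
    """Return (class_index, score) pairs for the k highest scores."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return [(index, float(score)) for index, score in ranked[:k]]


def classify_one_frame(model_path: str, camera_index: int = 0, k: int = 3) -> List[Tuple[int, float]]:
    """Grab one frame with OpenCV and classify it with a TFLite model."""
    import cv2
    import numpy as np
    from tflite_runtime.interpreter import Interpreter

    interpreter = Interpreter(model_path=model_path, num_threads=4)
    interpreter.allocate_tensors()
    inp = interpreter.get_input_details()[0]
    out = interpreter.get_output_details()[0]
    _, height, width, _ = inp["shape"]  # NHWC layout, e.g. [1, 224, 224, 3]

    cap = cv2.VideoCapture(camera_index)
    try:
        ok, frame = cap.read()
    finally:
        cap.release()
    if not ok:
        raise RuntimeError("could not read a frame from the camera")

    # OpenCV returns BGR; most TFLite vision models expect RGB at a fixed size.
    rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    batch = np.expand_dims(cv2.resize(rgb, (int(width), int(height))), axis=0)
    if inp["dtype"] == np.float32:
        batch = (batch / 255.0).astype(np.float32)  # assumption: model expects [0, 1]
    elif inp["dtype"] != np.uint8:
        # uint8 models take raw pixels; int8 inputs need their own mapping.
        raise ValueError(f"unsupported input type {inp['dtype']}")

    interpreter.set_tensor(inp["index"], batch)
    interpreter.invoke()
    scores = interpreter.get_tensor(out["index"])[0]
    scale, zero_point = out["quantization"]
    if scale:  # quantized output: convert integer codes back to real scores
        scores = scale * (scores.astype(np.float32) - zero_point)
    return top_k(scores.tolist(), k)
```

Call it as `classify_one_frame("model.tflite")`; the file name is a placeholder, and turning class indices into names requires the label file that ships with your model.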

Other Framework Options

While TensorFlow Lite and OpenCV are popular choices, several other frameworks offer unique advantages for edge AI vision projects. MediaPipe provides ready-to-use solutions for face detection, pose estimation, and hand tracking, making it ideal for interactive applications. PyTorch Mobile excels in deep learning tasks and offers excellent model optimization capabilities, though it may require more computational resources.

For simpler projects, Edge Impulse provides an intuitive platform for training and deploying custom vision models without extensive coding. Intel’s OpenVINO toolkit stands out for its optimization capabilities on x86 architectures, though its compatibility with Raspberry Pi is limited.

ONNX Runtime serves as a versatile option for running models from different frameworks, offering good performance and cross-platform compatibility. For those prioritizing speed, Google’s Coral tooling (the Edge TPU runtime and the PyCoral library) provides exceptional performance when paired with Edge TPU hardware.

Consider your project’s specific requirements, processing power needs, and expertise level when choosing a framework. Many developers combine multiple frameworks to leverage their respective strengths.

Security and Privacy Considerations

When implementing edge AI vision systems, security and privacy considerations must be at the forefront of your project planning. Following proper IoT security best practices is essential to protect both your device and the data it processes.

First, ensure physical security of your edge device. Since these systems often process sensitive visual data, restrict access to the hardware and secure it in a protected location. Use tamper-evident cases when possible, especially for outdoor installations.

Data encryption is crucial at every stage – both for stored data and during transmission. Implement strong encryption protocols for any visual data being sent to cloud services or other devices. Consider using hardware-accelerated encryption when available to maintain processing efficiency.

Regular firmware and software updates are vital for maintaining security. Create an update schedule and automate the process when possible, but always verify updates before deployment to avoid system disruptions.
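One concrete way to verify an update before deploying it is to compare its SHA-256 digest with a checksum published by the vendor through a trusted channel. This only proves the file matches that checksum; authenticity still depends on how you obtained the checksum (signed releases are stronger). A standard-library sketch:

```python
import hashlib
import hmac
from pathlib import Path
from typing import Union


def sha256_of(path: Union[str, Path], chunk_size: int = 1 << 20) -> str:
    """Hex SHA-256 of a file, read in chunks so large files never load at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_update(path: Union[str, Path], expected_hex: str) -> bool:
    """True only if the file's digest matches the published checksum."""
    return hmac.compare_digest(sha256_of(path), expected_hex.strip().lower())
```

Deploy the new model or package only when `verify_update(...)` returns True; otherwise keep the current version running.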

Access control should be implemented at multiple levels. Use strong authentication for device access, API endpoints, and any connected services. Consider implementing role-based access control if multiple users need different levels of system access.
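Role-based access control boils down to mapping roles to the permissions they grant and checking that mapping on every request, denying anything not explicitly granted. The role and permission names below are hypothetical examples for a camera system.

```python
from enum import Enum
from typing import Dict, FrozenSet


class Permission(Enum):
    VIEW_LIVE = "view_live"
    VIEW_RECORDINGS = "view_recordings"
    CONFIGURE = "configure"
    UPDATE_MODEL = "update_model"


# Hypothetical roles; each grants a fixed set of permissions.
ROLE_PERMISSIONS: Dict[str, FrozenSet[Permission]] = {
    "viewer": frozenset({Permission.VIEW_LIVE}),
    "operator": frozenset({Permission.VIEW_LIVE, Permission.VIEW_RECORDINGS}),
    "admin": frozenset(Permission),  # every permission
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles get no permissions."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())
```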

Privacy protection is particularly important for vision systems. Implement masking or blurring for sensitive areas in captured images, and ensure compliance with relevant privacy regulations like GDPR or CCPA. Consider implementing features that allow for automatic deletion of processed data that doesn’t need to be stored.
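Masking sensitive areas is straightforward once you know their coordinates. The sketch below works on a plain 2-D list of pixel values so it runs anywhere; the boxes are `(x, y, width, height)` tuples, which could come from a fixed privacy zone or from a face detector.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)


def mask_regions(image: Sequence[Sequence[int]], boxes: Sequence[Box], fill: int = 0) -> List[List[int]]:
    """Return a copy of a 2-D image with every box overwritten by `fill`."""
    masked = [list(row) for row in image]  # copy so the original frame is untouched
    height = len(masked)
    width = len(masked[0]) if height else 0
    for x, y, w, h in boxes:
        # Clip each box to the image so partially off-screen boxes are safe.
        x0, y0 = max(0, x), max(0, y)
        x1, y1 = min(width, x + w), min(height, y + h)
        for row in range(y0, y1):
            for col in range(x0, x1):
                masked[row][col] = fill
    return masked
```

With OpenCV frames (NumPy arrays), the same idea is a slice assignment such as `frame[y:y + h, x:x + w] = 0`, or replacing that slice with `cv2.GaussianBlur(frame[y:y + h, x:x + w], (51, 51), 0)` when a blur is preferred over a solid mask.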

Network security should include proper firewall configuration, network segregation, and secure protocols for data transmission. When possible, operate your edge AI system on an isolated network segment to minimize exposure to potential threats.

Regularly audit your system’s security measures and maintain logs of device access and data processing activities. This helps in detecting potential security breaches and ensures compliance with privacy requirements.
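Structured (JSON) log lines make audit trails easy to search and to forward to other tools. A minimal sketch using Python’s standard `logging` module; the logger name, field names, and file path are assumptions.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "event": record.getMessage(),
        }
        # Extra audit fields passed via extra={"audit": {...}}.
        entry.update(getattr(record, "audit", {}))
        return json.dumps(entry)


def make_audit_logger(path: str) -> logging.Logger:
    """Logger that appends JSON lines to `path`."""
    logger = logging.getLogger("edge_vision.audit")
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid duplicate handlers on repeated calls
        handler = logging.FileHandler(path)  # opens in append mode by default
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logger
```

Typical use: `make_audit_logger("audit.log").info("model_updated", extra={"audit": {"user": "admin"}})`.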

Future-Proofing Your Edge AI Vision Projects

As edge AI vision technology evolves rapidly, it’s crucial to build projects that can adapt and grow with changing requirements. Start by choosing modular hardware components that allow for easy upgrades – consider using standardized interfaces and connectors that won’t become obsolete quickly. When selecting cameras and sensors, opt for those with broad compatibility and ongoing manufacturer support.

On the software side, implement a containerized approach using tools like Docker to isolate your application components. This makes it easier to update individual parts of your system without affecting the whole. Structure your code with clear separation between the AI model, inference engine, and application logic. This modularity allows you to swap out models or upgrade processing components as needed.
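The separation between model, inference engine, and application logic can be expressed with a small interface. In this sketch, `InferenceEngine` is a hypothetical protocol: a TensorFlow Lite, ONNX Runtime, or Edge TPU backend would each implement `detect()`, and the alerting logic never needs to know which one is running. The fake engine shows how the application logic can be tested without hardware.

```python
from dataclasses import dataclass
from typing import List, Protocol, Sequence


@dataclass(frozen=True)
class Detection:
    label: str
    score: float


class InferenceEngine(Protocol):
    """Any backend that turns a frame into detections."""

    def detect(self, frame: bytes) -> List[Detection]: ...


class AlertPolicy:
    """Application logic: independent of which engine or model is running."""

    def __init__(self, engine: InferenceEngine, watch_labels: Sequence[str], min_score: float = 0.5) -> None:
        self.engine = engine
        self.watch_labels = set(watch_labels)
        self.min_score = min_score

    def should_alert(self, frame: bytes) -> bool:
        return any(
            d.label in self.watch_labels and d.score >= self.min_score
            for d in self.engine.detect(frame)
        )


class FakeEngine:
    """Test double that returns canned detections."""

    def __init__(self, detections: Sequence[Detection]) -> None:
        self.detections = list(detections)

    def detect(self, frame: bytes) -> List[Detection]:
        return list(self.detections)
```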

Version control is essential – use Git to track changes and maintain documentation of your project’s evolution. Regular testing and benchmarking help identify performance bottlenecks early, allowing you to optimize before scaling becomes necessary. Consider implementing automatic model retraining pipelines to keep your AI current with new data.

Storage and data management strategies are crucial for long-term success. Implement efficient data preprocessing and filtering at the edge to reduce unnecessary storage and transmission. Plan for data archival and cleanup procedures from the start to prevent system bloat over time.
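A cleanup procedure can be as simple as deleting captures older than a retention period, run from a scheduled job. The `*.jpg` pattern and the retention period are placeholders; the optional `now` argument exists only to make the function easy to test.

```python
import time
from pathlib import Path
from typing import List, Optional, Union


def purge_older_than(
    directory: Union[str, Path],
    max_age_days: float,
    pattern: str = "*.jpg",
    now: Optional[float] = None,
) -> List[Path]:
    """Delete files matching `pattern` whose modification time is past the cutoff."""
    cutoff = (time.time() if now is None else now) - max_age_days * 86400
    removed = []
    for path in Path(directory).glob(pattern):
        if path.is_file() and path.stat().st_mtime < cutoff:
            path.unlink()
            removed.append(path)
    return removed
```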

Keep an eye on emerging edge AI frameworks and standards. While TensorFlow Lite and OpenVINO are popular now, be prepared to adapt to new tools that might offer better performance or features. Follow a cloud-native approach where possible, making it easier to shift processing between edge and cloud as needs change.

Finally, consider power consumption and hardware limitations from the beginning. Design your system to gracefully degrade performance rather than fail completely under resource constraints. This approach helps keep your project functional even as processing demands increase over time.
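Graceful degradation can be as simple as processing fewer frames when inference takes longer than the time available, instead of letting a backlog build up, and recovering when there is headroom again. A minimal sketch, assuming you measure inference time for each processed frame; the 0.8 recovery margin and the cap of 10 are arbitrary choices.

```python
class AdaptiveFrameSkipper:
    """Adjust how many camera frames pass between inferences."""

    def __init__(self, frame_interval_ms: float, max_skip: int = 10, margin: float = 0.8) -> None:
        self.frame_interval_ms = frame_interval_ms
        self.max_skip = max_skip
        self.margin = margin
        self.skip = 1  # start by processing every frame

    def update(self, inference_ms: float) -> int:
        """Feed the latest inference time; returns the new skip interval."""
        available_ms = self.skip * self.frame_interval_ms
        if inference_ms > available_ms and self.skip < self.max_skip:
            self.skip += 1  # falling behind: process fewer frames
        elif self.skip > 1 and inference_ms < self.margin * (self.skip - 1) * self.frame_interval_ms:
            self.skip -= 1  # comfortable headroom: process more frames
        return self.skip
```

Use the returned interval to decide how many frames to pass over before the next inference; under sustained overload the system slows its analysis rate rather than stalling.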

Edge AI vision technology represents an exciting frontier in embedded computing, offering remarkable possibilities for makers and developers alike. Throughout this guide, we’ve explored the essential components needed to bring computer vision capabilities to edge devices, from selecting the right hardware to implementing efficient AI models and optimizing performance.

By leveraging the power of Raspberry Pi and similar edge devices, you can now build sophisticated vision systems that operate independently of cloud services, ensuring faster response times and enhanced privacy. The combination of frameworks like TensorFlow Lite and OpenCV, coupled with dedicated AI accelerators, has made it possible to create projects ranging from smart security cameras to automated quality control systems.

As you begin your journey into edge AI vision, remember to start with smaller projects and gradually build up to more complex applications. Focus on optimizing your models for edge deployment and always consider the balance between accuracy and performance. The field is rapidly evolving, with new tools and frameworks emerging regularly, so stay connected with the community and keep exploring new possibilities.

Whether you’re a hobbyist, educator, or professional developer, the skills you’ve learned here will serve as a solid foundation for creating innovative AI vision solutions. Take the next step by implementing your first edge AI vision project and joining the growing community of edge AI developers.